This is a summary of the blog post from @Peter Voss with slight edits.

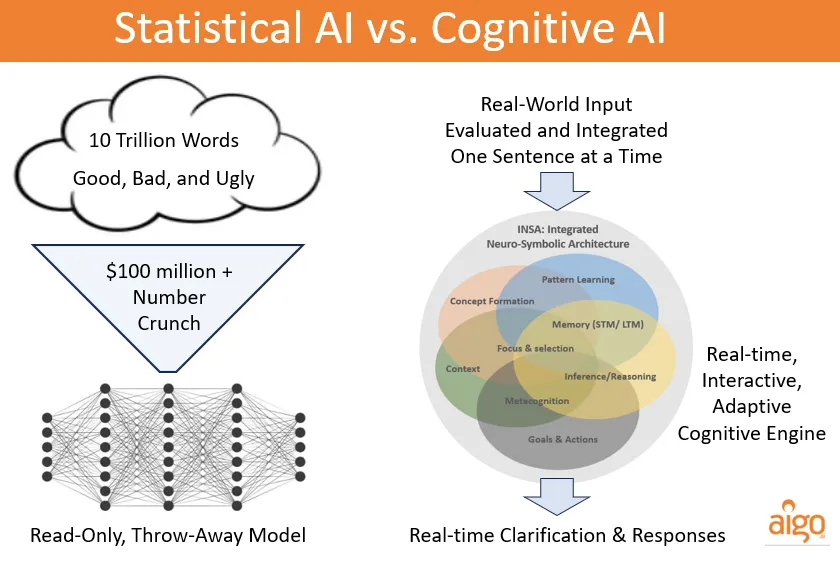

Statistical AI, including Deep Learning, Generative AI, and Large Language Models, relies fundamentally on backpropagation and gradient descent. This approach requires all training data to be available upfront, which is then processed through numerous calculation cycles to produce a model of statistical relationships. The result is frozen, read-only AI—the core model cannot be updated incrementally in real time. These systems are not adaptive to changes in the world and must be periodically discarded and retrained to remain current. A number of techniques are used to mitigate this key limitation.
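As a rough illustration of the batch workflow described above, here is a minimal sketch of full-batch gradient descent on a toy linear model. The function name `train_batch`, the dataset, and the hyperparameters are all assumptions made for illustration and are not taken from the original post. Real deep-learning systems use backpropagation through many layers, but the pattern is the same: the whole dataset is passed in upfront, the parameters are adjusted over many cycles, and the result is then used as a fixed model.

```python
def train_batch(xs, ys, epochs=2000, lr=0.05):
    """Fit y = w*x + b with full-batch gradient descent (toy example)."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Each cycle uses the gradient of mean squared error over ALL data.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Hypothetical training set, generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

# Training happens once, with all data available upfront.
w, b = train_batch(xs, ys)


def predict(x):
    """Use the trained parameters as-is; nothing here updates w or b."""
    return w * x + b
```

In this sketch, folding in new observations means calling `train_batch` again on the extended dataset, which mirrors the periodic retraining the paragraph describes.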

Current models train on over 10 trillion words (good, bad, and ugly—without distinguishing between them). Using massive, power-hungry datacenters, they require weeks or months to train, costing hundreds of millions of dollars per run. Compare this to human intelligence: we learn language and reasoning with a few million words using a 20-watt brain, not 20 gigawatts.

State-of-the-art LLMs are impressive. Five years ago, few would have predicted that scaling up the Generative Pre-trained Transformer (GPT) architecture would provide such a compelling illusion of ‘intelligence’ with genuinely useful applications. While Statistical and Generative AI have shown us what AI may become, they fall short of true human-like intelligence, which can understand and reason. For that, we need Cognitive AI.

Cognitive AI is based on first principles of human cognition and intelligence. The defining characteristics needed to achieve human-level intelligence include the ability to learn and generalize in real time with potentially incomplete data while utilizing limited time and resources—the same constraints humans face in everyday life and advanced research. It also requires proactive, self-directed reasoning and decision-making without requiring human validation. Two key terms define this approach: real-world adaptive and autonomous.

Like humans, Cognitive AI processes one sentence or idea at a time. This proactive assessment can trigger clarification questions or additional research to build a coherent, contextually consistent world model and respond appropriately. The advantages of intelligently integrating new knowledge incrementally in real time are substantial: it reduces initial training data requirements a million-fold and computational costs by many orders of magnitude while enabling a focus on data quality over quantity. Autonomously validated knowledge and reasoning enhance reliability and accuracy while eliminating hallucinations. This approach, with a significantly smaller hardware footprint, paves the way for truly personal AI not beholden to mega-corporations.

Several historic limitations of cognitive architectures had to be overcome for Cognitive AI to work in the real world. These challenges have been addressed in at least two implementations: INSA (Integrated Neuro-Symbolic Architecture) and Active Inference AI. The only thing holding more companies back from working on Cognitive AI is the current big-data statistical AI paradigm, in which tech giants are hiring the majority of the AI talent. Once the statistical AI bubble implodes, the next AI wave will be the Cognitive AI framework, which is expected to finally bring human-level intelligence.

Image credit: Peter Voss (https://petervoss.substack.com/p/cognitive-ai-vs-statistical-ai)

Why Neuro-Symbolic must be Integrated

https://petervoss.substack.com/p/why-neuro-symbolic-must-be-integrated

VERSES Active Inference Research

https://www.verses.ai/active-inference-research

How o3 and Grok 4 Accidentally Vindicated Neurosymbolic AI

https://garymarcus.substack.com/p/how-o3-and-grok-4-accidentally-vindicated

‘It’s going to be really bad’: Fears over AI bubble bursting grow in Silicon Valley

https://www.bbc.com/news/articles/cz69qy760weo

The AI bubble is heading towards a burst but it won’t be the end of AI