Inspired by the way the human retina works, NILEQ neuromorphic cameras don’t capture a series of images, but instead track changes in brightness across the sensor’s individual pixels. This approach generates far less data and operates at much higher speeds than a conventional camera. These cameras are ideal for environments where reliable Global Navigation Satellite Systems (GNSS) are unavailable and processing power is limited.
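To make the idea of tracking brightness changes concrete, here is a toy sketch in Python. It is not NILEQ's sensor design: a real neuromorphic pixel fires asynchronously in hardware, whereas this example compares two small brightness grids purely for illustration. The function name `brightness_events`, the log-brightness comparison, and the `threshold` value are all assumptions made for this sketch.

```python
import math

def brightness_events(prev_frame, next_frame, threshold=0.2):
    """Return (row, col, polarity) for each pixel whose log-brightness
    changed by at least `threshold` between the two grids.

    Hypothetical helper for illustration only; unchanged pixels
    produce no output at all, which is why the data volume is small.
    """
    events = []
    for r, (prev_row, next_row) in enumerate(zip(prev_frame, next_frame)):
        for c, (p, n) in enumerate(zip(prev_row, next_row)):
            # Small offset avoids log(0) for fully dark pixels.
            delta = math.log(n + 1e-6) - math.log(p + 1e-6)
            if abs(delta) >= threshold:
                events.append((r, c, 1 if delta > 0 else -1))
    return events

# Two 2x3 brightness grids (values between 0 and 1): only two pixels change.
prev_frame = [[0.5, 0.5, 0.5],
              [0.5, 0.5, 0.5]]
next_frame = [[0.5, 0.9, 0.5],
              [0.2, 0.5, 0.5]]

events = brightness_events(prev_frame, next_frame)
print(events)
```

Of the six pixels, only the two that changed are reported, each with a polarity indicating whether it got brighter or darker. A static scene produces no events at all.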

𝗔 𝗣𝗼𝘄𝗲𝗿𝗳𝘂𝗹 𝗣𝗮𝗿𝘁𝗻𝗲𝗿𝘀𝗵𝗶𝗽: 𝗡𝗲𝘂𝗿𝗼𝗺𝗼𝗿𝗽𝗵𝗶𝗰 𝗖𝗮𝗺𝗲𝗿𝗮𝘀 𝗮𝗻𝗱 𝗜𝗠𝗨𝘀

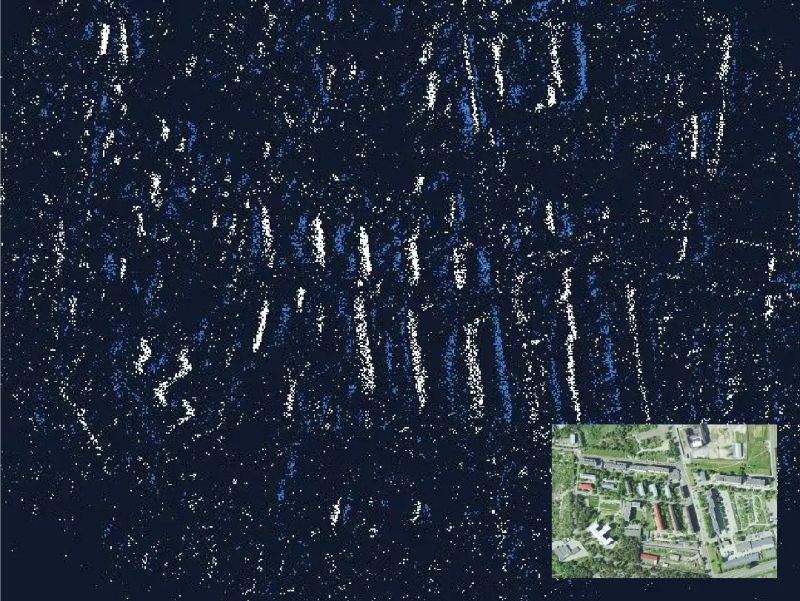

Combining Inertial Measurement Units (IMUs) with neuromorphic camera data through sensor fusion creates a robust navigation system capable of operating in environments where GNSS is unavailable. The technology tracks precise “terrain fingerprints” by analyzing brightness changes, then compares them against pre-loaded satellite imagery databases to correct accumulated IMU drift. The approach offers significant benefits: minimal computational overhead, low power consumption, and the ability to navigate in challenging environments.
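The drift-correction idea can be sketched in a few lines of Python. This is a simplified one-dimensional illustration under stated assumptions, not the companies' fusion algorithm: the function `dead_reckon_with_fixes`, the constant velocity bias, the fix interval, and the blending `gain` are all hypothetical, and the absolute position fixes simply stand in for successful matches against the imagery database. A production system would more commonly use a Kalman-style filter rather than this fixed-gain blend.

```python
def dead_reckon_with_fixes(velocities, dt, fixes, gain=0.8, start=0.0):
    """Integrate IMU-derived velocities and pull the estimate toward
    occasional absolute position fixes.

    velocities: measured velocity for each time step (may be biased)
    fixes: dict mapping step index -> absolute position (hypothetical
           stand-in for a terrain-fingerprint match)
    gain: fraction of the position error removed at each fix
    """
    position = start
    track = []
    for step, v in enumerate(velocities):
        position += v * dt                 # dead reckoning: error accumulates
        if step in fixes:
            position += gain * (fixes[step] - position)  # correct the drift
        track.append(position)
    return track

steps, dt = 100, 1.0
true_velocity, bias = 10.0, 0.5            # assumed sensor bias causes drift
measured = [true_velocity + bias] * steps
truth = [true_velocity * dt * (i + 1) for i in range(steps)]

# Assume a terrain match is available on every tenth step.
fixes = {i: truth[i] for i in range(9, steps, 10)}

uncorrected = dead_reckon_with_fixes(measured, dt, {})
corrected = dead_reckon_with_fixes(measured, dt, fixes)

final_error_uncorrected = abs(uncorrected[-1] - truth[-1])
final_error_corrected = abs(corrected[-1] - truth[-1])
print(final_error_uncorrected, final_error_corrected)
```

Without fixes, the position error grows steadily with every step. With periodic fixes, the error stays bounded, which is the role the terrain fingerprints play in the system described above.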

𝗙𝗿𝗼𝗺 𝗘𝗮𝗿𝘁𝗵 𝘁𝗼 𝗦𝗽𝗮𝗰𝗲: 𝗘𝘅𝗽𝗮𝗻𝗱𝗶𝗻𝗴 𝗡𝗮𝘃𝗶𝗴𝗮𝘁𝗶𝗼𝗻 𝗛𝗼𝗿𝗶𝘇𝗼𝗻𝘀

Currently developed for drone navigation, this technology shows immense promise for space exploration. Potential applications include aerial navigation on the Moon and Mars, where GNSS is not reliably available. The challenge for planetary surface navigation is converting aerial satellite imagery into a format that rovers can use to identify surface landmarks.

Chris Shaw of Advanced Navigation states: “This approach of using the neuromorphic camera alongside low-cost, inexpensive inertial sensors, provides a big cost and size benefit.” The companies plan to start flight trials of the neuromorphic navigation system later this year, with the goal of getting the product into customers’ hands by the middle of 2025.

𝗨𝘀𝗲 𝗼𝗳 𝗡𝗲𝘂𝗿𝗼𝗺𝗼𝗿𝗽𝗵𝗶𝗰 𝗖𝗮𝗺𝗲𝗿𝗮𝘀 𝗳𝗼𝗿 𝗡𝗮𝘃𝗶𝗴𝗮𝘁𝗶𝗼𝗻 𝗶𝗻 𝗘𝗻𝘃𝗶𝗿𝗼𝗻𝗺𝗲𝗻𝘁𝘀 𝘄𝗶𝘁𝗵𝗼𝘂𝘁 𝗿𝗲𝗹𝗶𝗮𝗯𝗹𝗲 𝗚𝗡𝗦𝗦